Evaluation of Power CPU architecture for deep learning

Project goal

We are investigating the performance of distributed training and inference of different deep-learning models on a cluster consisting of IBM Power8 CPUs (with NVIDIA V100 GPUs) installed at CERN. A series of deep neural networks is being developed to reproduce the initial steps in the data-processing chain of the DUNE experiment. More specifically, a combination of convolutional neural networks and graph neural networks is being designed to reduce noise and to select the portions of the data that the reconstruction step should focus on (region selector).

Collaborators

Project background

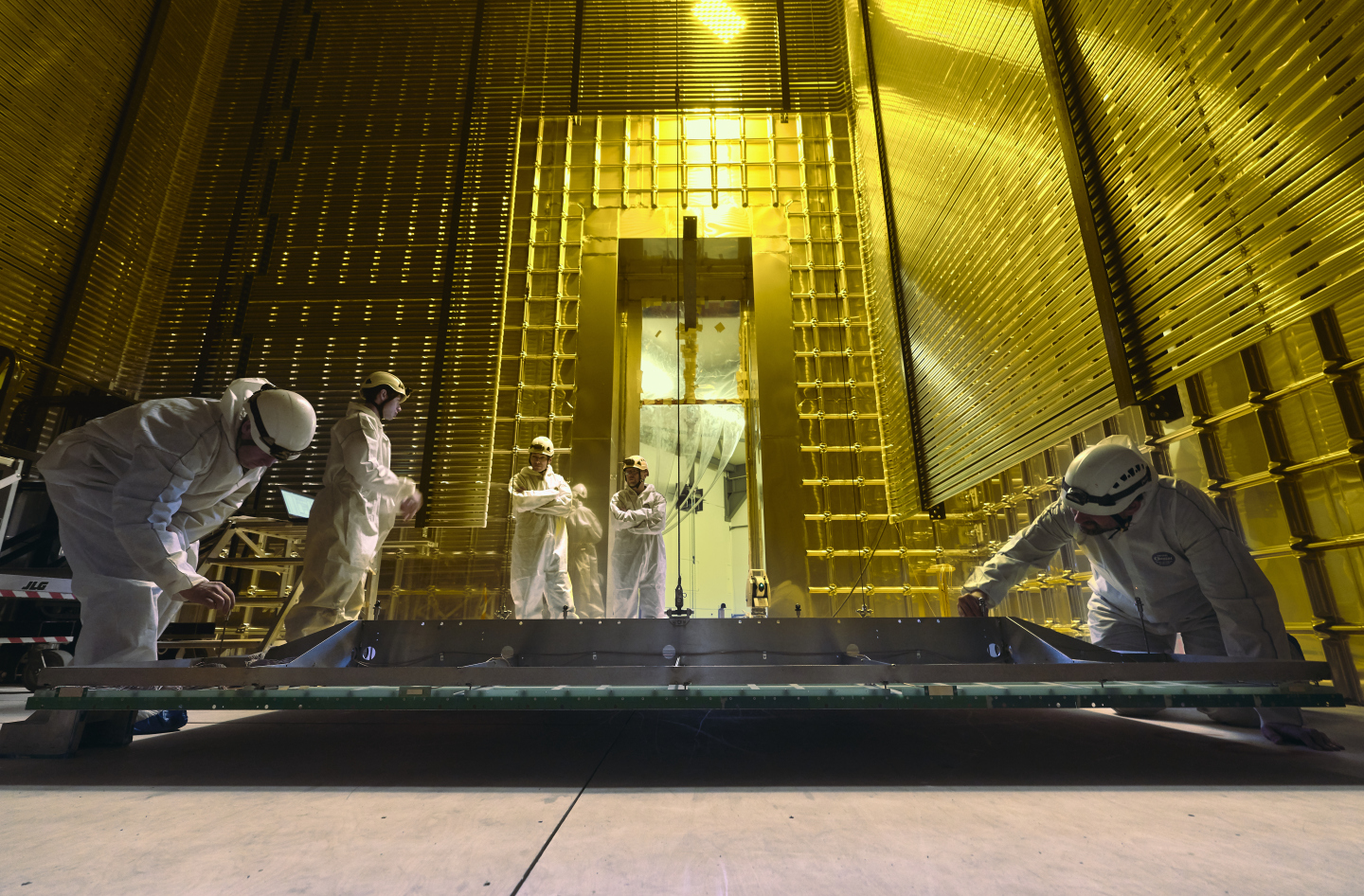

Neutrinos are elusive particles: they have a very low probability of interacting with other matter. In order to maximise the likelihood of detection, neutrino detectors are built as large, sensitive volumes. Such detectors produce very large data sets. Although large in size, these data sets are usually very sparse, meaning dedicated techniques are needed to process them efficiently. Deep-learning methods are being investigated by the community with great success.

Recent progress

We have developed a deep neural network architecture based on a combination of two-dimensional convolutional layers and graphs. These networks can analyse both real and simulated data from protoDUNE and perform the region selection and de-noising tasks, which are usually applied to the raw detector data before any other processing is run.

Both of these networks improve on the classical approaches currently integrated in the experiment software stack. To reduce training time and to set up hyper-parameter scans, the training process for the networks has been parallelised and benchmarked on the IBM Minsky cluster.

In accordance with the concept of data-parallel distributed learning, we trained our models on a total of twelve GPUs, distributed over the three nodes that comprise the test Power cluster. Each GPU ingests a unique part of the physics dataset for training the model.
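The data-parallel scheme described above can be illustrated with a minimal sketch. It is not the project's training code: the even split of four GPUs per node, the strided partitioning, and the helper names `shard_indices` and `average_gradients` are assumptions made for illustration. Each worker receives a disjoint subset of sample indices, and the gradients computed by the workers are averaged, which is the role an all-reduce operation plays in a real distributed framework.

```python
from typing import List

NUM_NODES = 3       # from the text: three nodes in the test Power cluster
GPUS_PER_NODE = 4   # assumption: twelve GPUs spread evenly over the nodes
WORLD_SIZE = NUM_NODES * GPUS_PER_NODE  # one worker per GPU


def shard_indices(num_samples: int, rank: int,
                  world_size: int = WORLD_SIZE) -> List[int]:
    """Return the sample indices assigned to one worker.

    A strided partition: worker `rank` takes samples rank,
    rank + world_size, rank + 2 * world_size, and so on, so that
    no two workers see the same sample.
    """
    if not 0 <= rank < world_size:
        raise ValueError(f"rank must be in [0, {world_size}), got {rank}")
    return list(range(rank, num_samples, world_size))


def average_gradients(per_worker_grads: List[List[float]]) -> List[float]:
    """Element-wise mean of the workers' gradients.

    Stands in for the all-reduce step that keeps the model replicas
    identical after every update.
    """
    num_workers = len(per_worker_grads)
    return [sum(values) / num_workers for values in zip(*per_worker_grads)]


if __name__ == "__main__":
    shards = [shard_indices(100, rank) for rank in range(WORLD_SIZE)]
    for rank, shard in enumerate(shards):
        node, local_gpu = divmod(rank, GPUS_PER_NODE)
        print(f"node {node}, GPU {local_gpu}: {len(shard)} samples")
```

With this partitioning the shard sizes differ by at most one sample, so no GPU sits idle for long at the end of an epoch. Real frameworks usually also shuffle the indices every epoch before splitting them.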

Next steps

Publications

- M. Rossi, S. Vallecorsa, Deep Learning Strategies for ProtoDUNE Raw Data Denoising. Published by Springer Nature, 2022. cern.ch/go/kzj6

Presentations

- A. Hesam, Evaluating IBM POWER Architecture for Deep Learning in High-Energy Physics (23 January). Presented at CERN openlab Technical Workshop, Geneva, 2018. cern.ch/go/7BsK

- D. H. Cámpora Pérez, ML based RICH reconstruction (8 May). Presented at Computing Challenges meeting, Geneva, 2018. cern.ch/go/xwr7

- D. H. Cámpora Pérez, Millions of circles per second. RICH at LHCb at CERN (7 June). Presented as a seminar at the University of Seville, Seville, 2018.

- M. Rossi, Deep Learning strategies for ProtoDUNE raw data denoising (18 May). Presented at 25th International Conference on Computing in High-Energy and Nuclear Physics, vCHEP2021, Geneva, 2021. cern.ch/go/VK7P

- M. Rossi, Slicing with DL at ProtoDUNE-SP (29 November). Presented at 20th International Workshop on Advanced Computing and Analysis Techniques in Physics Research, ACAT2021, Daejeon, 2021. cern.ch/go/Z6jT