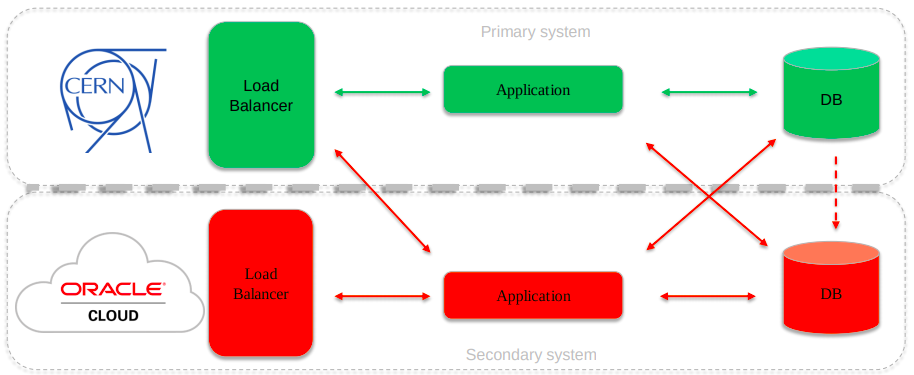

Designing and operating distributed data infrastructures and computing centres poses challenges in areas such as networking, architecture, storage, databases, and cloud. These challenges are amplified, and new ones arise, when operating at the extremely large scales required by major scientific endeavours. CERN is evaluating different models for increasing computing and data-storage capacity to accommodate the growing needs of the LHC experiments over the next decade. Each model presents its own technological challenges. In addition to increasing the on-premises capacity of the systems used for traditional types of data processing and storage, CERN is exploring a number of complementary distributed architectures, as well as specialised capabilities offered by cloud and HPC infrastructures. These will add heterogeneity and flexibility to the data centres, and should enable advances in resource optimisation.