Project goal

The aim of this project is to demonstrate the scalability and performance of Kubernetes and Google Cloud, validating this set-up for future computing models. The focus is on taking both existing and new high-energy physics (HEP) use cases and identifying the best-suited and most cost-effective set-up for each of them.

Collaborators

Project background

As we look towards the next-generation tools and infrastructure that will serve HEP use cases, external cloud resources open up a wide range of new possibilities for improved workload performance. They can also help us to improve efficiency in a cost-effective way.

The project relies on well-established APIs and tools supported by most public cloud providers – particularly Kubernetes and other Cloud Native Computing Foundation (CNCF) projects in its ecosystem – to expand available on-premises resources to Google Cloud. Special focus is put on use cases with spiky usage patterns, as well as those that can benefit from scaling out to large numbers of GPUs and other dedicated accelerators like TPUs.

Both traditional physics analysis and newer computing models based on machine learning are considered by this project.

Recent progress

The year started with consolidation of the work from the previous year. New runs of more traditional physics analysis helped with the validation of Google Cloud as a viable and performant solution for scaling our HEP workloads. A major milestone for this work was the publication of a CERN story on the Google Cloud Platform website (see publications).

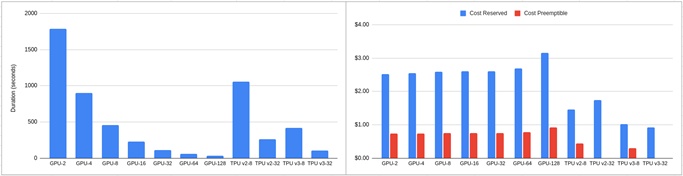

The main focus in 2020, however, was on evaluating next-generation workloads at scale. We targeted machine learning in particular, as this places significant requirements on GPUs and other types of accelerators, like TPUs.

We demonstrated that HEP machine-learning workloads can scale out linearly to hundreds of GPUs in parallel, and that public cloud resources have the potential to offer HEP users a way to speed up their workloads by at least an order of magnitude in a cost-effective way.
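The link between linear scaling and cost-effectiveness can be illustrated with simple arithmetic: if a workload scales linearly, spreading it over N GPUs cuts the wall-clock time by a factor of N while the total GPU-hours billed, and hence the cost, stay roughly the same. The following is a minimal sketch of that reasoning only. The function name `scaled_run`, the `efficiency` parameter, and all numbers (100 GPU-hours, a price of 2.0 per GPU-hour) are hypothetical and are not measurements from this project.

```python
def scaled_run(single_gpu_hours, n_gpus, price_per_gpu_hour, efficiency=1.0):
    """Estimate wall-clock time and cost of a workload spread over n_gpus.

    efficiency = 1.0 models perfectly linear scaling; lower values model
    overheads such as communication between workers (hypothetical parameter).
    """
    if n_gpus < 1:
        raise ValueError("n_gpus must be at least 1")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the interval (0, 1]")

    # Wall-clock time shrinks with the effective number of GPUs.
    wall_clock_hours = single_gpu_hours / (n_gpus * efficiency)
    # Billing is per GPU, for as long as the run lasts.
    total_cost = wall_clock_hours * n_gpus * price_per_gpu_hour
    return wall_clock_hours, total_cost


if __name__ == "__main__":
    # Hypothetical workload: 100 GPU-hours at an assumed price of 2.0 per GPU-hour.
    for n in (1, 10, 100):
        hours, cost = scaled_run(100.0, n, 2.0)
        print(f"{n:>3} GPUs: {hours:6.2f} h wall-clock, cost {cost:.2f}")
```

Under the perfectly linear assumption, the cost is identical for every value of `n_gpus` while the wall-clock time drops proportionally. Setting `efficiency` below 1.0 shows how any departure from linear scaling raises the cost of the same speed-up.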

Next steps

Publications

- R. Rocha, L. Heinrich, Higgs-demo. Published on GitHub. 2019. cern.ch/go/T8QQ

- R. Rocha, Helping researchers at CERN to analyse powerful data and uncover the secrets of our universe. Published on the Google Cloud Platform website, 2020. cern.ch/go/Q7Tn

Presentations

- R. Rocha, L. Heinrich, Reperforming a Nobel Prize Discovery on Kubernetes (21 May). Presented at KubeCon Europe 2019, Barcelona, 2019. cern.ch/go/PlC8

- R. Rocha, L. Heinrich, Higgs Analysis on Kubernetes using GCP (19 September). Presented at Google Cloud Summit, Munich, 2019. cern.ch/go/Dj8f

- R. Rocha, L. Heinrich, Reperforming a Nobel Prize Discovery on Kubernetes (7 November). Presented at the 24th International Conference on Computing in High-Energy and Nuclear Physics (CHEP), Adelaide, 2019. cern.ch/go/6Htg

- R. Rocha, L. Heinrich, Deep Dive into the Kubecon Higgs Analysis Demo (5 July). Presented at CERN IT Technical Forum, Geneva, 2019. cern.ch/go/6zls