Global crises, like the COVID-19 pandemic, have highlighted the need to increase the pace at which data is collected, organised, analysed and shared at large scale. This is vital for supporting rapid, informed and accountable response mechanisms from governments and other organisations. Achieving this will play an important role in addressing critical and urgent medical, social, economic and educational challenges.

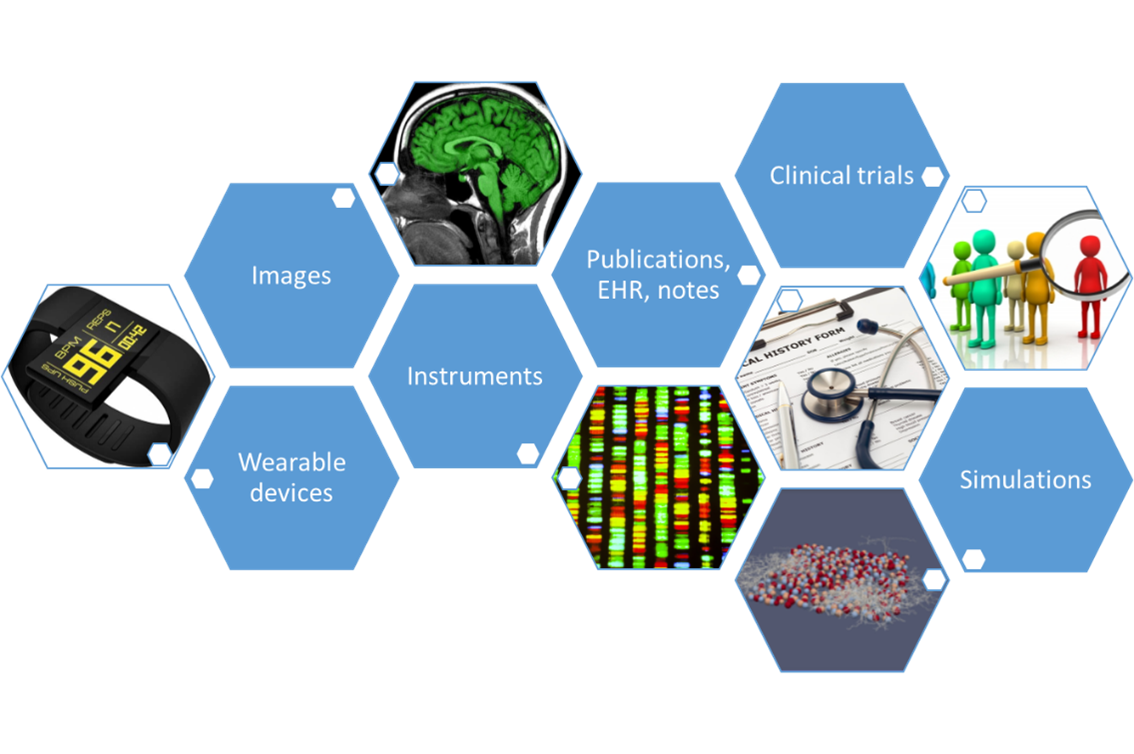

Large-scale, cross-disciplinary investigations that draw on large amounts of data from multiple sources are recognised as difficult to implement. For such investigations to be effective, barriers related to data management, governance, access, scalability and reproducibility must be overcome.

Today, different research groups use different data, assumptions, models and methods. As a result, they can reach conclusions that cannot be objectively challenged, because other research teams do not have access to the same information and cannot reproduce the work. Even when research succeeds, other teams can find it difficult to build upon it.

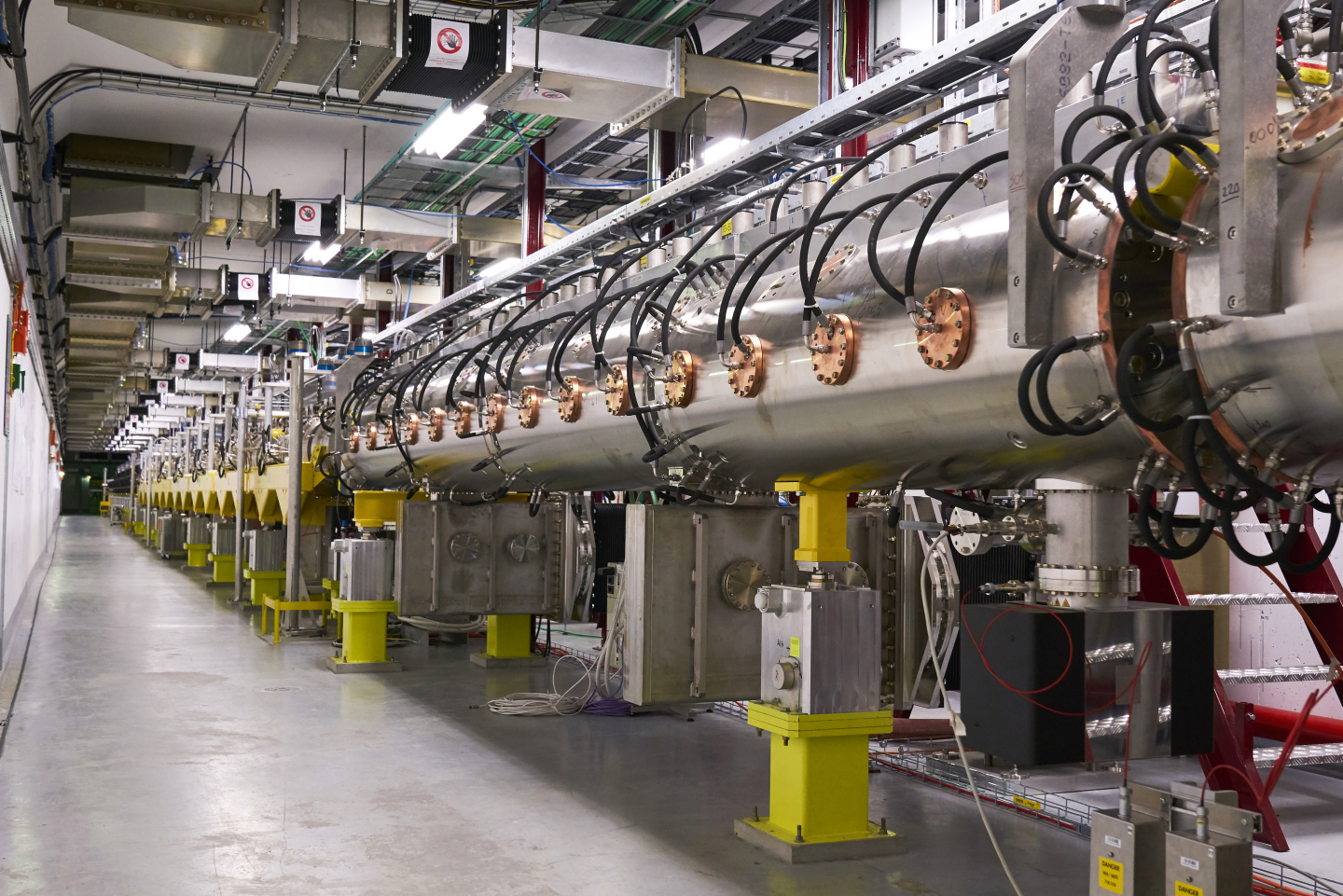

CERN has a long, proven track record in open science and in implementing and managing large-scale, data-driven operations. In collaboration with international initiatives and projects, CERN engineers and physicists have developed efficient strategies for managing data at scale, as well as tools that support these strategies. These optimised systems, combined with experience in implementing distributed systems and a strong culture of openly sharing ideas, software and data, make CERN an ideal partner for multi-disciplinary, data-driven research projects based on open-access data and open-source tools.

Project timeline

The project started in March 2021 and is set to run for three years.

Year 1: Analysis of use cases, requirements, technology and functional gaps. A minimum-viable-product prototype will be tested with early users.

Year 2: Iterative integration of functions, tools, and best practices.

Year 3: A public beta version will be released and extended to address additional use cases. It will be deployed on infrastructures outside CERN.
